January 09, 2023

EAM - the eyes of the INTREPID system.

The EAM is the Environmental Assessment Module, the assessment tool for the INTREPID project.

What is the EAM?

INTREPID is not only a European project but also a collaborative one: several companies work together to enrich the system. INMOS is the sum of many modules. Among them, the EAM, or Environmental Assessment Module, is considered the eyes of the INTREPID system.

The purpose of the EAM is to assess the environment. It detects, recognises and locates crucial elements of the area to help the End-Users (EU) make important decisions.

To do this, the EAM collects information from different types of sensors (RGB camera, LiDAR, ...), placed on robotic agents (UAV, UGV) and first responders (FR). Then, through Artificial Intelligence and deep learning networks, it processes each sensor's data to extract features and perform detection.

The EAM detecting fire on a car during Pilot 2 test.

A collaborative project

The EAM is part of a whole and needs other modules in order to function properly. In particular, the RTPM (Real Time Positioning Module) is indispensable for geolocating the data collected by the various sensors. It is the combination of these two modules that makes geolocated detections possible.
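To illustrate how a position and a detection can be combined, here is a minimal sketch. It is not the actual EAM or RTPM interface: the function `geolocate`, its parameters and the flat-earth approximation are all assumptions made for this example. It takes the position and heading of an agent (as a positioning module might report them) and the range and relative bearing of a detected object (as a detection module might report them), and returns an approximate latitude and longitude for the object.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, good enough for short ranges


def geolocate(agent_lat, agent_lon, agent_heading_deg, rel_bearing_deg, range_m):
    """Approximate the lat/lon of a detection seen from an agent.

    Hypothetical helper: uses a local flat-earth approximation, which is
    only reasonable for ranges of a few hundred metres at most.
    """
    # Absolute bearing of the object, clockwise from north.
    bearing = math.radians((agent_heading_deg + rel_bearing_deg) % 360.0)

    # Offset of the object from the agent, in metres.
    north = range_m * math.cos(bearing)
    east = range_m * math.sin(bearing)

    # Convert the metric offsets into degrees of latitude and longitude.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(agent_lat))))
    return agent_lat + dlat, agent_lon + dlon


# An agent facing north that sees an object 100 m straight ahead.
lat, lon = geolocate(43.3, 5.4, agent_heading_deg=0.0, rel_bearing_deg=0.0, range_m=100.0)
```

In this example the object ends up slightly north of the agent and at the same longitude, which is what one would expect for a detection straight ahead of a north-facing agent.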

In turn, other modules use the EAM's detections to improve their own results:

- PPM (Path Planning Module) takes the detections into account,

- EMM (Environment Mapping Module) uses them to perform the 3D mapping function,

- IAM (Intelligence Amplification Module) uses them to produce recommendations and missions linked to the detections.

Disaster conditions

By disaster we mean a situation where there may be fire, heat or rubble. All of these conditions are factors that make the environment what we call "harsh".

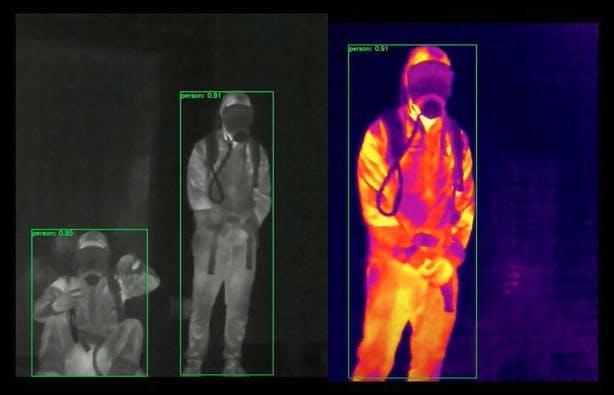

This makes detection much more difficult. However, because the information comes from different sources (it is a multi-modal input), the module can cope with some sensor limitations: if one sensor fails to detect an object, the EAM can still ensure the detection by exploiting the other sensors. For example, an infrared camera and a LiDAR can help when the RGB camera is not very effective due to poor lighting or smoke.

The deep learning methods used by the model to fuse the data from these sensors also address this issue.
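The fallback idea described above can be sketched in a few lines. This is only an illustration, not the EAM's method: the EAM fuses sensor data with deep learning, whereas the sketch uses a simple rule (keep the highest confidence among the sensors that produced a usable output). The names `SensorReading` and `fuse_detection`, the scores and the 0.5 threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorReading:
    sensor: str                  # e.g. "rgb", "infrared", "lidar"
    confidence: Optional[float]  # score in [0, 1], or None if the sensor gave no usable output


def fuse_detection(readings, threshold=0.5):
    """Rule-based late fusion: ignore failed sensors, keep the best score.

    Returns (detected, score). Hypothetical stand-in for a learned fusion model.
    """
    usable = [r for r in readings if r.confidence is not None]
    if not usable:
        # Every sensor failed, so nothing can be said about the scene.
        return False, 0.0
    score = max(r.confidence for r in usable)
    return score >= threshold, score


# Smoke blinds the RGB camera, but the infrared camera still sees the object.
readings = [
    SensorReading("rgb", None),
    SensorReading("infrared", 0.82),
    SensorReading("lidar", 0.40),
]
detected, score = fuse_detection(readings)
```

Here the RGB camera contributes nothing, yet the object is still reported because the infrared score is above the threshold. A learned fusion model can go further by combining features from all sensors rather than only comparing their final scores.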

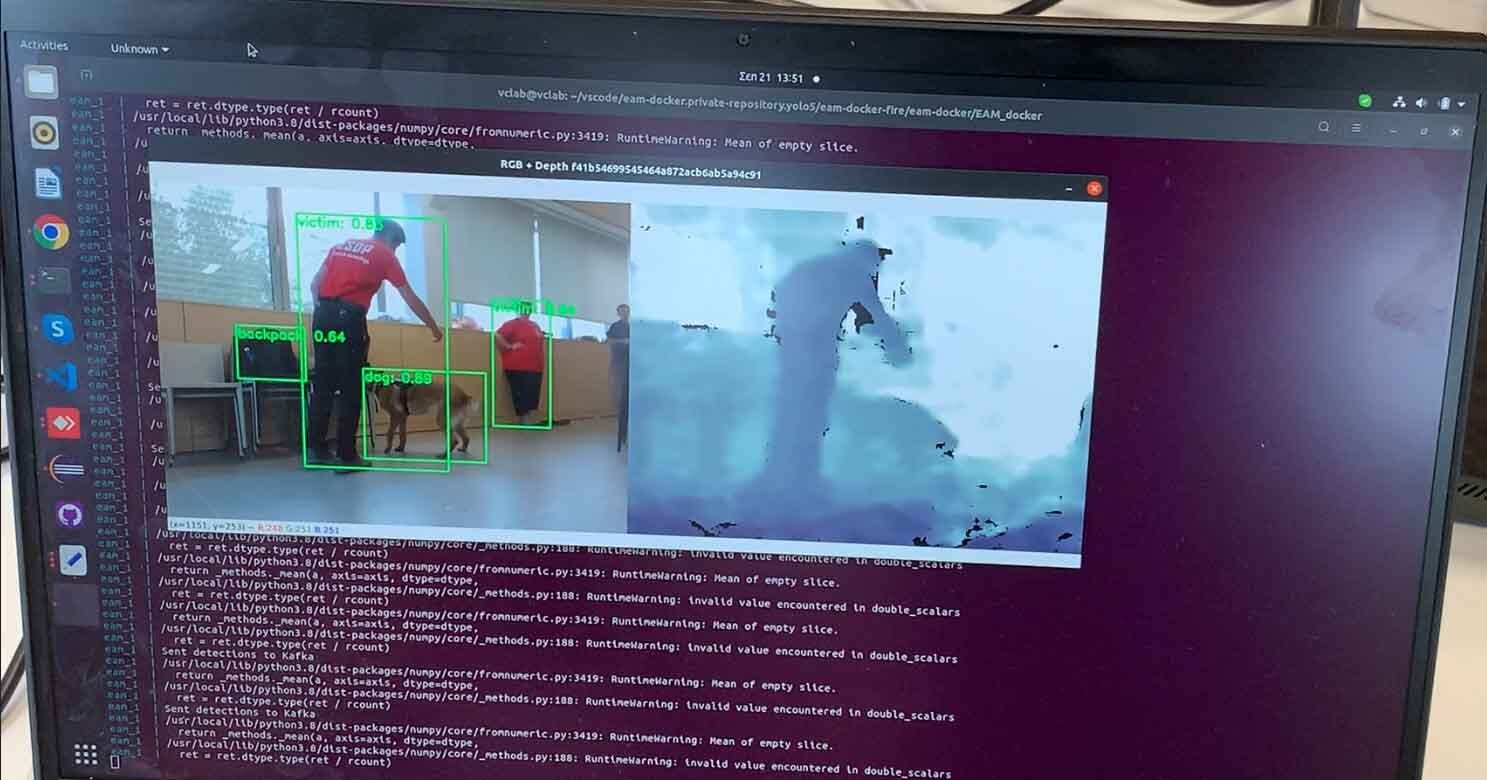

The EAM detecting several items such as a backpack, or a dog.

Pilot 2 steps

INTREPID is tested regularly, at different stages. The Alpha version of the EAM is already available and was tested in the first pilot, where it was integrated into the overall system and showed its ability to detect victims.

Our consortium partner CERTH, responsible for the EAM, tested the beta version in the second pilot in Marseille.

New features were added:

- Extending the detection capabilities of the EAM with a list of objects of interest, defined with the End-Users (backpack, hazardous materials, ...).

- Research on multimodal detection of 3D objects, by merging 3D LiDAR data and RGB data.

- Research on the tracking of non-rigid objects (smoke, fire).

IR (Infrared) detections
